- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

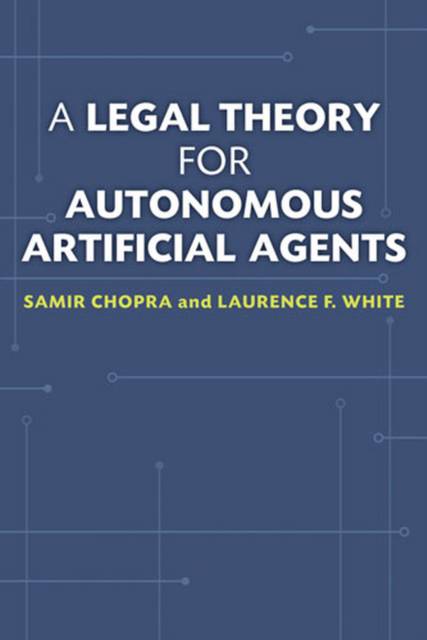

A Legal Theory for Autonomous Artificial Agents

Samir Chopra, Laurence F. White

Description

"An extraordinarily good synthesis from an amazing range of philosophical, legal, and technological sources . . . the book will appeal to legal academics and students, lawyers involved in e-commerce and cyberspace legal issues, technologists, moral philosophers, and intelligent lay readers interested in high tech issues, privacy, [and] robotics."

--Kevin Ashley, University of Pittsburgh School of Law

As corporations and government agencies replace human employees with online customer service and automated phone systems, we become accustomed to doing business with nonhuman agents. If artificial intelligence (AI) technology advances as today's leading researchers predict, these agents may soon function with such limited human input that they appear to act independently. When they achieve that level of autonomy, what legal status should they have?

Samir Chopra and Laurence F. White present a carefully reasoned discussion of how existing philosophy and legal theory can accommodate increasingly sophisticated AI technology. Arguing for the legal personhood of an artificial agent, the authors discuss what it means to say it has "knowledge" and the ability to make a decision. They consider key questions such as who must take responsibility for an agent's actions, whom the agent serves, and whether it could face a conflict of interest.

Specifications

Contributors

- Author(s):

- Samir Chopra, Laurence F. White

- Publisher:

Content

- Number of pages:

- 264

- Language:

- English

Product details

- EAN:

- 9780472051458

- Publication date:

- 15 July 2011

- Format:

- Paperback

- Format (detail):

- Trade paperback (VS)

- Dimensions:

- 167 mm x 228 mm

- Weight:

- 403 g