- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

Description

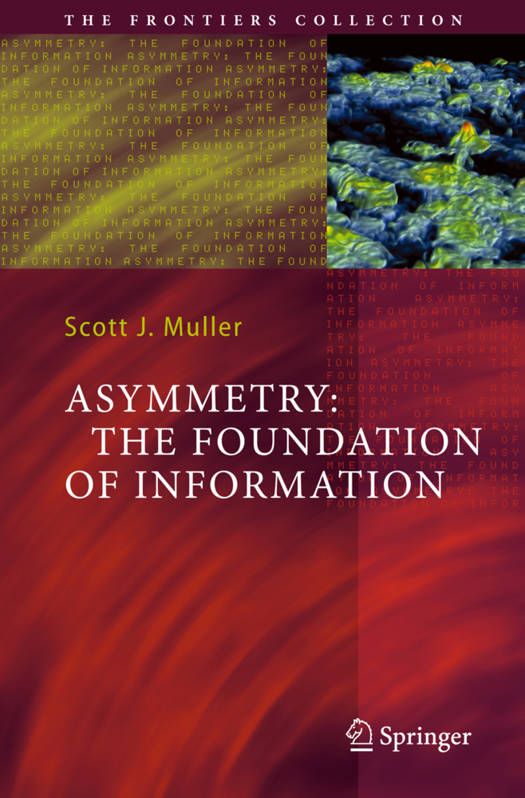

This book gathers concepts of information from diverse fields: physics, electrical engineering and computational science. It surveys current theories, discusses the underlying notions of symmetry, and shows how information relates to a system's capacity to distinguish itself. The author develops a formal methodology using group theory, leading to the application of Burnside's Lemma to count distinguishable states. This yields a tool for quantifying the complexity and information capacity of any physical system.
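As a hedged illustration of the counting step mentioned above, here is the textbook form of Burnside's Lemma, not the author's own formalism. The number of distinguishable states equals the average number of states left unchanged by each symmetry operation: |X/G| = (1/|G|) Σ_{g∈G} |Fix(g)|. The sketch assumes a toy system of n cells arranged in a ring, each holding one of k values, with cyclic rotations as the only symmetries. The functions `rotate`, `burnside_count` and `count_by_enumeration` are hypothetical names chosen for this example.

```python
from itertools import product


def rotate(state, shift):
    """Rotate a tuple left by `shift` positions (the group action on states)."""
    return state[shift:] + state[:shift]


def burnside_count(n, k):
    """Count distinguishable states of a ring of n cells with k values each,
    treating states related by a cyclic rotation as identical.

    Burnside's Lemma: |X/G| = (1/|G|) * sum over g in G of |Fix(g)|.
    """
    states = list(product(range(k), repeat=n))  # all k**n raw states
    group = range(n)  # cyclic group C_n: rotations by 0, 1, ..., n-1
    # Total number of (group element, state) pairs where the state is fixed.
    total_fixed = sum(1 for g in group for s in states if rotate(s, g) == s)
    orbits, remainder = divmod(total_fixed, len(group))
    assert remainder == 0, "Burnside's Lemma always yields an integer"
    return orbits


def count_by_enumeration(n, k):
    """Cross-check: keep one canonical representative (the smallest rotation)
    per orbit and count the distinct representatives."""
    return len({min(rotate(s, g) for g in range(n))
                for s in product(range(k), repeat=n)})


if __name__ == "__main__":
    for n, k in [(4, 2), (6, 2), (3, 3)]:
        print(n, k, burnside_count(n, k), count_by_enumeration(n, k))
```

For n = 4 and k = 2 both functions return 6. The brute-force version is exponential in n; for larger rings one would instead use the closed form in which a rotation by g fixes k^gcd(g, n) states.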

Written in an informal style, the book is equally accessible to researchers in physics, chemistry, biology, computational science and many other fields.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages:

- 165

- Language:

- English

- Series:

Details

- EAN:

- 9783540698838

- Publication date:

- 15-05-07

- Format:

- Hardcover

- Digital format:

- Sewn

- Dimensions:

- 169 mm x 239 mm

- Weight:

- 421 g
