- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

Description

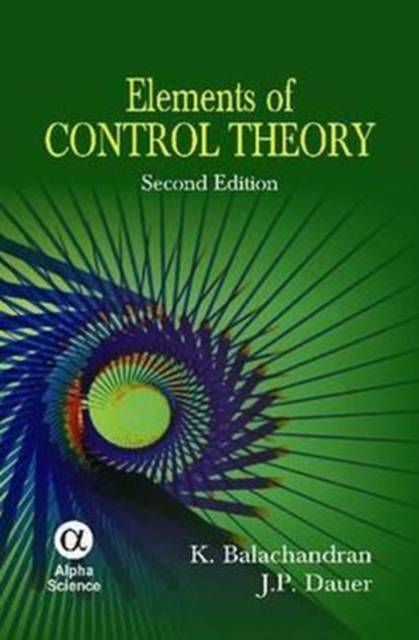

This book studies the basic problems of control theory (observability, controllability, stability, Lyapunov stability, stabilizability, and optimal control) for dynamical systems represented by ordinary differential equations in a finite-dimensional Euclidean space. The same problems are also considered for nonlinear dynamical systems. The contents are organized to serve as an introductory text that helps readers understand the basic ingredients of control theory. Numerous examples illustrate the concepts, and each chapter ends with a set of exercises for students. The prerequisites are elementary courses in analysis, differential equations, and the theory of matrices.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages: 154

- Language: English

Characteristics

- EAN: 9781842656976

- Publication date: 30-01-12

- Format: Hardcover

- Digital format: Sewn

- Dimensions: 160 mm x 240 mm

- Weight: 398 g

Reviews

We publish only reviews that meet the required conditions. See our conditions for reviews.