- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

Description

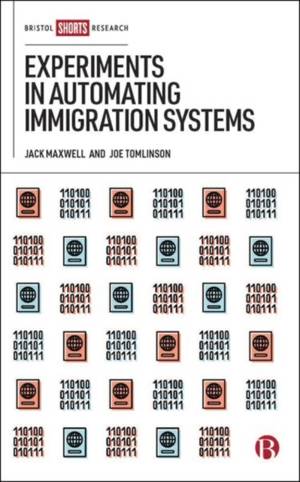

In recent years, the United Kingdom's Home Office has started using automated systems to make immigration decisions. These systems promise faster, more accurate, and cheaper decision-making, but in practice they have exposed people to distress, disruption, and even deportation.

This book identifies a pattern of risky experimentation with automated systems in the Home Office. It analyses three recent case studies: a voice recognition system used to detect fraud in English-language testing; an algorithm for identifying 'risky' visa applications; and automated decision-making in the EU Settlement Scheme.

The book argues that a precautionary approach is essential to ensure that society benefits from government automation without exposing individuals to unacceptable risks.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages: 130

- Language: English

Features

- EAN: 9781529219845

- Publication date: 25-01-22

- Format: Hardcover

- Digital format: Unsewn / perfect bound

- Dimensions: 127 mm x 203 mm

- Weight: 254 g