- Free pickup at your Club store

- 7,000,000 titles in our catalog

- Secure payment

- Always a store near you

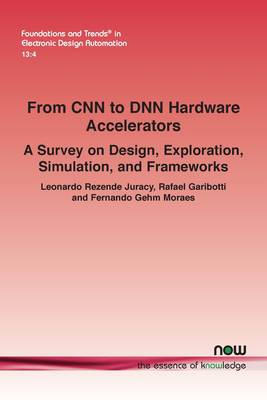

From CNN to DNN Hardware Accelerators: A Survey on Design, Exploration, Simulation, and Frameworks

Leonardo Rezende Juracy, Rafael Garibotti, Fernando Gehm Moraes

€88.45

+ 176 points

Description

The past decade has witnessed the consolidation of Artificial Intelligence technology, thanks to the popularization of Machine Learning (ML) models. The technological boom of ML models started in 2012, when the world was stunned by the record-breaking classification performance achieved by combining an ML model with a high-performance graphics processing unit (GPU). Since then, ML models have received ever-increasing attention and have been applied in areas such as computer vision, virtual reality, voice assistants, chatbots, and self-driving vehicles. The most popular ML models are brain-inspired models such as Neural Networks (NNs), including Convolutional Neural Networks (CNNs) and, more recently, Deep Neural Networks (DNNs). They resemble the human brain, processing data by mimicking synapses through thousands of interconnected neurons in a network. In this growing environment, GPUs have become the de facto reference platform for the training and inference phases of CNNs and DNNs, due to their high processing parallelism and memory bandwidth. However, GPUs are power-hungry architectures. To enable the deployment of CNN and DNN applications on energy-constrained devices (e.g., IoT devices), industry and academic research have moved towards hardware accelerators. Following the evolution of neural networks from CNNs to DNNs, this monograph sheds light on the impact of this architectural shift and discusses hardware accelerator trends in terms of design, exploration, simulation, and frameworks developed in both academia and industry.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages:

- 88

- Language:

- English

- Series:

Details

- EAN:

- 9781638281627

- Publication date:

- 06-03-23

- Format:

- Paperback

- Digital format:

- Trade paperback (VS)

- Dimensions:

- 156 mm x 234 mm

- Weight:

- 136 g

Reviews

We only publish reviews that meet the required conditions. See our review conditions.