- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you


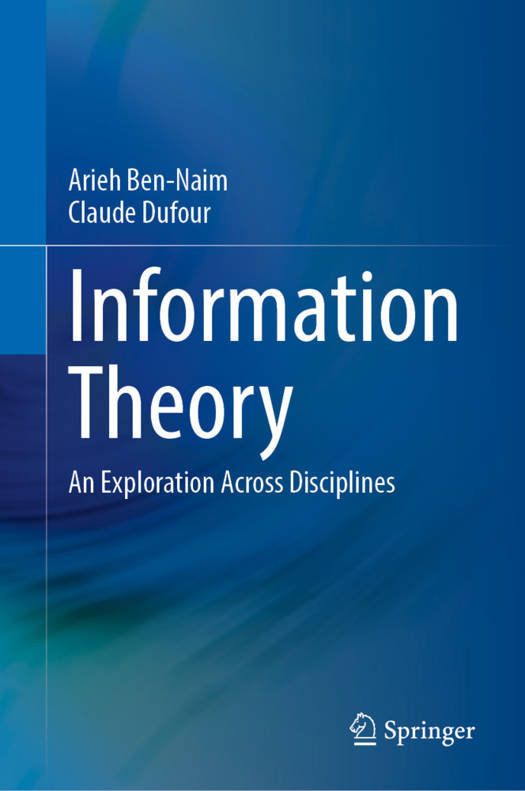

Information Theory

An Exploration Across Disciplines

Arieh Ben-Naim, Claude Dufour

Description

This monograph explores interdisciplinary applications of information theory, focusing on entropy, mutual information, and their implications across various fields. It explains the fundamental differences between entropy and Shannon's Measure of Information (SMI), presents applications of information theory to living systems and psychology, and discusses the role of entropy in art. It critically reviews the definitions of correlations and multivariate mutual information. These notions are used to build a new perspective on the irreversibility of processes in macroscopic systems, even though the dynamical laws governing their microscopic components are reversible. It also examines the use of mutual information in linguistics, cryptography, steganography, and communication systems. The book details both the theoretical and practical aspects of information theory across a spectrum of disciplines and is a useful resource for any scientist interested in what is usually called entropy.

Specifications

Contributors

- Author(s):

- Arieh Ben-Naim, Claude Dufour

- Publisher:

Contents

- Number of pages:

- 248

- Language:

- English

Details

- EAN:

- 9783031677465

- Publication date:

- 13 December 2024

- Format:

- Hardcover

- Digital format:

- Sewn

- Dimensions:

- 156 mm x 234 mm

- Weight:

- 548 g