- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you


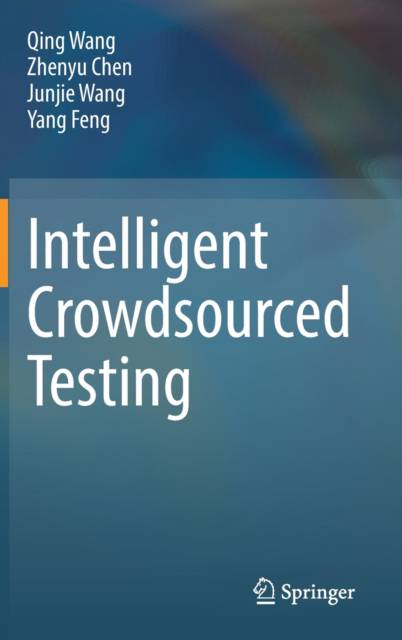

Intelligent Crowdsourced Testing

Qing Wang, Zhenyu Chen, Junjie Wang, Yang Feng

Description

Crowdsourced testing is an emerging paradigm that can improve the cost-effectiveness of software testing and accelerate the process, especially for mobile applications. It entrusts testing tasks to online crowdworkers whose diverse testing devices/contexts, experience, and skill sets can significantly contribute to more reliable, cost-effective and efficient testing results. It has already been adopted by many software organizations, including Google, Facebook, Amazon and Microsoft.

This book provides an overview of intelligent crowdsourced testing research and practice. It shows how machine learning, data mining, and deep learning techniques can process the data generated during crowdsourced testing, both to facilitate the management of crowdsourced testing and to improve its quality.

Specifications

Contributors

- Author(s):

- Qing Wang, Zhenyu Chen, Junjie Wang, Yang Feng

- Publisher:

Contents

- Number of pages:

- 251

- Language:

- English

Details

- EAN:

- 9789811696428

- Publication date:

- 17-06-22

- Format:

- Hardcover

- Digital format:

- Sewn

- Dimensions:

- 156 mm x 234 mm

- Weight:

- 553 g

Reviews

We only publish reviews that meet the required conditions. See our review conditions.