- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

Description

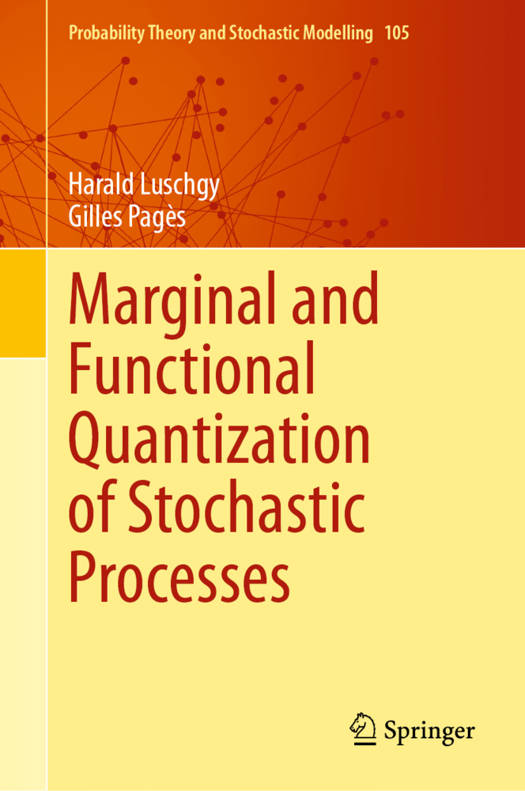

Vector Quantization, a pioneering discretization method based on nearest neighbor search, emerged in the 1950s primarily in signal processing, electrical engineering, and information theory. Later in the 1960s, it evolved into an automatic classification technique for generating prototypes of extensive datasets. In modern terms, it can be recognized as a seminal contribution to unsupervised learning through the k-means clustering algorithm in data science.

In contrast, Functional Quantization, a more recent area of study dating back to the early 2000s, focuses on the quantization of continuous-time stochastic processes viewed as random vectors in Banach function spaces. This book distinguishes itself by treating the quantization of random vectors with values in a Banach space, a unique feature of its content.

Its main objectives are twofold. First, it offers a comprehensive and cohesive overview of the latest developments, together with several new results, in optimal quantization theory in both finite and infinite dimensions, building upon the advances detailed in Graf and Luschgy's Lecture Notes volume. Second, it demonstrates how optimal quantization can be employed as a space discretization method within probability theory and numerical probability, particularly in fields like quantitative finance. The main applications to numerical probability are the controlled approximation of regular and conditional expectations by quantization-based cubature formulas, with applications to the time-space discretization of Markov processes, typically Brownian diffusions, by quantization trees.

While intended primarily for mathematicians specializing in probability theory and numerical probability, this monograph is also relevant to data scientists, electrical engineers involved in data transmission, and professionals in economics and logistics who are interested in optimal allocation problems.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages:

- 912

- Language:

- English

- Series:

- Volume:

- No. 105

Details

- EAN:

- 9783031454639

- Publication date:

- 07-12-23

- Format:

- Hardcover

- Digital format:

- Sewn

- Dimensions:

- 162 mm x 236 mm

- Weight:

- 1700 g

Reviews

We publish only reviews that meet the required conditions. See our review conditions.