- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

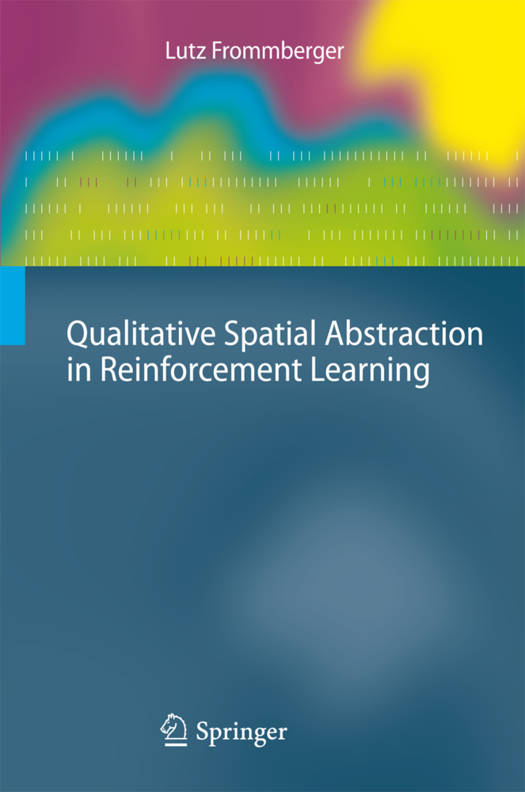

Qualitative Spatial Abstraction in Reinforcement Learning

Lutz Frommberger

Description

Reinforcement learning has emerged as a successful learning approach for domains that are not fully understood and that are too complex to be described in closed form. However, reinforcement learning does not scale well to large and continuous problems. Furthermore, acquired knowledge is specific to the learned task, and transfer of knowledge to new tasks is crucial.

In this book the author investigates whether these deficiencies of reinforcement learning can be overcome by suitable abstraction methods. He discusses various forms of spatial abstraction, in particular qualitative abstraction, a form of knowledge representation that has been thoroughly investigated and successfully applied in spatial cognition research. His approach exploits spatial structures and structural similarity to support the learning process, abstracting from less important features and emphasizing the essential ones. The author demonstrates his learning approach and the transferability of knowledge by having his system learn in a virtual robot simulation and subsequently transfer the acquired knowledge to a physical robot. The approach is influenced by findings from cognitive science.
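The description stays at a high level. As a rough, hypothetical illustration of the general idea of learning over a qualitative state abstraction (not the author's actual method, which this page does not describe), the Python sketch below runs tabular Q-learning in a one-dimensional corridor where the agent perceives only a qualitative relation to the goal: `left_of_goal`, `at_goal`, or `right_of_goal`. Everything here is an assumption made for the example: the corridor, the tolerance of 0.5, the reward of +1 for moving closer and -1 otherwise, and all function names (`abstract_state`, `q_learning`, `greedy_policy`, `run_policy`).

```python
import random

# Hypothetical qualitative abstraction (illustration only): a continuous
# position is reduced to one of three qualitative relations to the goal.
ABSTRACT_STATES = ("left_of_goal", "at_goal", "right_of_goal")
ACTIONS = (-1.0, 1.0)  # move one unit left or right


def abstract_state(x: float, goal: float, tol: float = 0.5) -> str:
    """Map a continuous position to its qualitative relation to the goal."""
    if abs(x - goal) <= tol:
        return "at_goal"
    return "left_of_goal" if x < goal else "right_of_goal"


def step(x: float, action: float, length: float) -> float:
    """Move the agent and keep it inside the corridor [0, length]."""
    return min(max(x + action, 0.0), length)


def q_learning(goal: float, length: float = 10.0, episodes: int = 200,
               alpha: float = 0.5, gamma: float = 0.5, epsilon: float = 0.1,
               seed: int = 0) -> dict:
    """Tabular Q-learning whose table is indexed by abstract states only."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in ABSTRACT_STATES for a in ACTIONS}
    for _ in range(episodes):
        x = rng.uniform(0.0, length)  # random continuous start position
        for _ in range(100):
            s = abstract_state(x, goal)
            if s == "at_goal":
                break
            # Epsilon-greedy action selection over the abstract state.
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: q[(s, act)])
            new_x = step(x, a, length)
            # Assumed reward: +1 if the agent got closer to the goal, else -1.
            reward = 1.0 if abs(new_x - goal) < abs(x - goal) else -1.0
            s2 = abstract_state(new_x, goal)
            if s2 == "at_goal":
                target = reward  # terminal transition: no bootstrapping
            else:
                target = reward + gamma * max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])
            x = new_x
    return q


def greedy_policy(q: dict) -> dict:
    """Pick the highest-valued action for each non-terminal abstract state."""
    return {s: max(ACTIONS, key=lambda act: q[(s, act)])
            for s in ABSTRACT_STATES if s != "at_goal"}


def run_policy(policy: dict, start: float, goal: float, length: float,
               max_steps: int = 100) -> tuple:
    """Execute an abstract policy in a (possibly different) corridor."""
    x = start
    for steps in range(max_steps):
        s = abstract_state(x, goal)
        if s == "at_goal":
            return True, steps
        x = step(x, policy[s], length)
    return abstract_state(x, goal) == "at_goal", max_steps


if __name__ == "__main__":
    policy = greedy_policy(q_learning(goal=8.0))
    print(policy)
    # Reuse the same abstract policy in a longer corridor with another goal.
    print(run_policy(policy, start=15.2, goal=3.0, length=20.0))
```

Because the Q-table is keyed only by qualitative states, the policy learned with the goal at 8.0 in a corridor of length 10 can be reused unchanged with the goal at 3.0 in a corridor of length 20. This is a toy analogue of the knowledge transfer the description mentions, not a model of the robot experiments in the book.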

The book is suitable for researchers working in artificial intelligence, in particular knowledge representation, learning, spatial cognition, and robotics.

Specifications

Contributors

- Author(s):

- Publisher:

Contents

- Number of pages:

- 174

- Language:

- English

- Series:

Details

- EAN:

- 9783642165894

- Publication date:

- 12-11-10

- Format:

- Hardcover

- Binding:

- Sewn

- Dimensions:

- 155 mm x 234 mm

- Weight:

- 399 g