- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

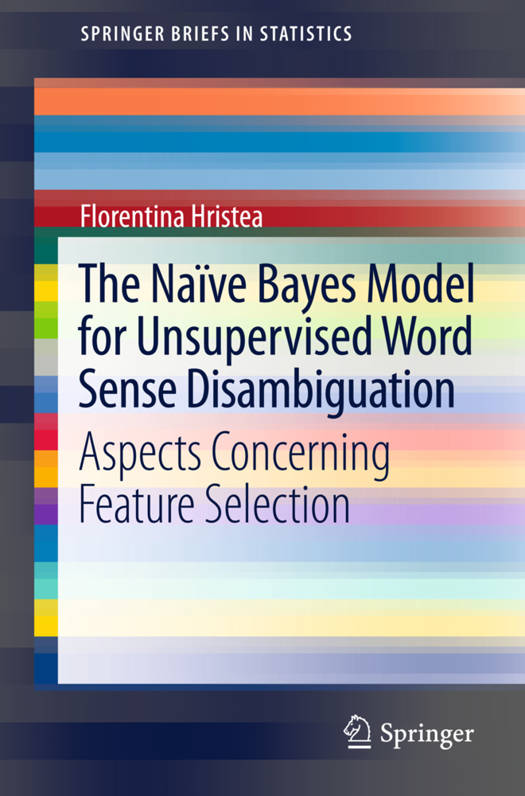

The Naïve Bayes Model for Unsupervised Word Sense Disambiguation

Aspects Concerning Feature Selection

Florentina T Hristea

Description

This book presents recent advances (from 2008 to 2012) concerning the use of the Naïve Bayes model in unsupervised word sense disambiguation (WSD).

WSD in general has important applications in various fields of artificial intelligence (information retrieval, text processing, machine translation, message understanding, man-machine communication, etc.). Unsupervised WSD is considered important because it is language-independent and does not require previously annotated corpora. The Naïve Bayes model has been widely used in supervised WSD, but its use in unsupervised WSD has been less frequent and has led to more modest disambiguation results. The potential of this statistical model for unsupervised WSD appears to remain insufficiently explored.

The present book contends that the Naïve Bayes model needs to be fed knowledge in order to perform well as a clustering technique for unsupervised WSD, and it examines three entirely different sources of such knowledge for feature selection: WordNet, dependency relations and web N-grams. WSD with an underlying Naïve Bayes model is ultimately positioned on the border between unsupervised and knowledge-based techniques. The book clearly highlights the benefits of feeding knowledge (of various natures) to a knowledge-lean algorithm for unsupervised WSD that uses the Naïve Bayes model as a clustering technique. The discussion shows that the Naïve Bayes model still holds promise for the open problem of unsupervised WSD.
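To make the idea of "Naïve Bayes as a clustering technique" concrete, here is a minimal sketch, not taken from the book: a Naïve Bayes mixture model fitted with EM over bag-of-words contexts, where only words from a pre-selected feature set are used. The function name `nb_em_cluster`, its parameters, and the add-one smoothing are assumptions made for illustration. How the feature set is obtained (WordNet, dependency relations, or web N-grams in the book) is outside the sketch.

```python
import math
import random


def nb_em_cluster(contexts, vocabulary, n_senses=2, n_iter=30, seed=0):
    """Group contexts of an ambiguous word into n_senses clusters.

    Hypothetical helper: contexts is a list of word lists, vocabulary is
    the set of selected feature words; all other words are ignored.
    """
    rng = random.Random(seed)
    vocab = sorted(vocabulary)
    # Keep only the selected feature words in each context.
    docs = [[w for w in ctx if w in vocabulary] for ctx in contexts]

    # Random soft initial assignment of contexts to senses.
    resp = []
    for _ in docs:
        row = [rng.random() + 0.1 for _ in range(n_senses)]
        total = sum(row)
        resp.append([r / total for r in row])

    for _ in range(n_iter):
        # M-step: smoothed sense priors and per-sense word probabilities.
        priors = [
            (sum(r[s] for r in resp) + 1.0) / (len(docs) + n_senses)
            for s in range(n_senses)
        ]
        word_probs = []
        for s in range(n_senses):
            counts = {w: 1.0 for w in vocab}  # add-one smoothing
            for doc, r in zip(docs, resp):
                for w in doc:
                    counts[w] += r[s]
            total = sum(counts.values())
            word_probs.append({w: c / total for w, c in counts.items()})

        # E-step: posterior over senses for each context, in log space.
        for i, doc in enumerate(docs):
            logp = [
                math.log(priors[s])
                + sum(math.log(word_probs[s][w]) for w in doc)
                for s in range(n_senses)
            ]
            top = max(logp)
            weights = [math.exp(lp - top) for lp in logp]
            z = sum(weights)
            resp[i] = [wt / z for wt in weights]

    # Hard assignment: most probable sense cluster per context.
    return [max(range(n_senses), key=lambda s: r[s]) for r in resp]


contexts = [
    ["river", "bank", "water"],
    ["bank", "loan", "money"],
    ["river", "bank", "water"],
    ["money", "bank", "deposit"],
]
features = {"river", "water", "loan", "money", "deposit"}
labels = nb_em_cluster(contexts, features)
```

The returned labels are arbitrary cluster indices rather than dictionary senses, and the result depends on the random initialisation, so the quality of the clusters rests largely on which feature words are supplied.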

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages:

- 70

- Language:

- English

- Series:

Characteristics

- EAN:

- 9783642336928

- Publication date:

- 08-11-12

- Format:

- Paperback

- Digital format:

- Trade paperback (US)

- Dimensions:

- 156 mm x 234 mm

- Weight:

- 145 g
