- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

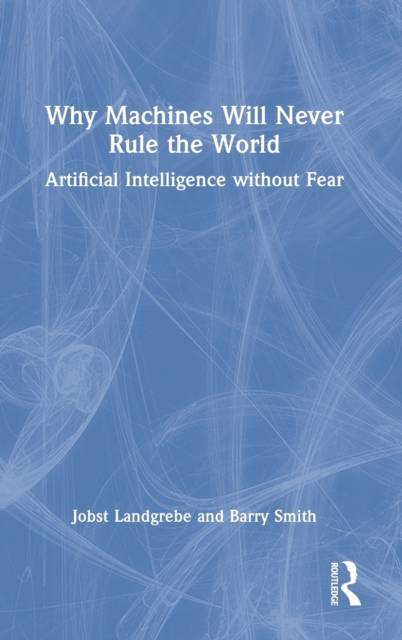

Why Machines Will Never Rule the World

Artificial Intelligence Without Fear

Jobst Landgrebe, Barry Smith

Description

The book's core argument is that an artificial intelligence that could equal or exceed human intelligence--sometimes called artificial general intelligence (AGI)--is for mathematical reasons impossible. It offers two specific reasons for this claim:

- Human intelligence is a capability of a complex dynamic system--the human brain and central nervous system.

- Systems of this sort cannot be modelled mathematically in a way that allows them to operate inside a computer.

In supporting their claim, the authors, Jobst Landgrebe and Barry Smith, marshal evidence from mathematics, physics, computer science, philosophy, linguistics, and biology, setting up their book around three central questions: What are the essential marks of human intelligence? What is it that researchers try to do when they attempt to achieve "artificial intelligence" (AI)? And why, after more than 50 years, are our most common interactions with AI, for example with our bank's computers, still so unsatisfactory?

Landgrebe and Smith show how a widespread fear about AI's potential to bring about radical changes in the nature of human beings and in the human social order is founded on an error. There is still, as they demonstrate in a final chapter, a great deal that AI can achieve which will benefit humanity. But these benefits will be achieved without the aid of systems that are more powerful than humans, which are as impossible as AI systems that are intrinsically "evil" or able to "will" a takeover of human society.

Specifications

Contributors

- Author(s): Jobst Landgrebe, Barry Smith

- Publisher:

Contents

- Number of pages: 342

- Language: English

Characteristics

- EAN: 9781032315164

- Publication date: 12-08-22

- Format: Hardcover

- Digital format: Genaaid (Dutch for "sewn")

- Dimensions: 152 mm x 229 mm

- Weight: 644 g
