- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

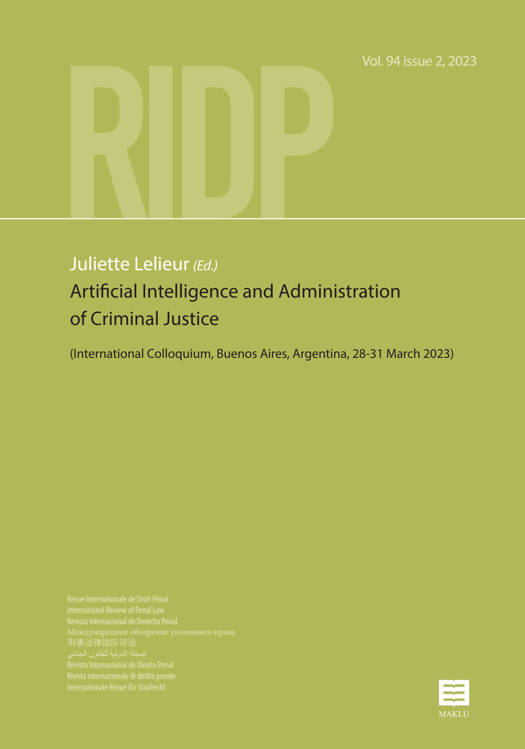

Artificial Intelligence and Administration of Criminal Justice

International Colloquium, Buenos Aires, Argentina, 28th-31st March 2023

Lelieur Juliette

Description

Artificial Intelligence systems are used today in several parts of the world to support the administration of criminal justice. The most widespread example is “predictive policing”, which aims to foretell crime before it happens and to improve its detection. AI enables both geospatial and person-based policing, and it is used to prevent and uncover economic crimes such as fraud and money laundering.

The efficiency of predictive policing has been questioned, especially in the context of crime mapping (or hot-spot analysis). As its compliance with human rights is also critically debated, some countries have abandoned it or ceased to rely on it. However, another kind of general surveillance of human activity has emerged with the performance of machine learning in facial recognition technology.

In contrast, the use of AI-based risk assessment tools by judicial authorities to forecast recidivism has remained limited to a few countries. Nevertheless, a new form of so-called “predictive justice” is currently emerging: it seeks to foretell not the future behavior of a suspected or convicted person but, surprisingly, the decisions of judicial bodies themselves, based largely on their past decisions. Quantitative legal analysis is a new achievement made possible by AI, but it raises serious concerns. It may radically change the role of judges and lawyers in the course of criminal justice. Not only does it put several human rights in tension, but it also challenges the very meaning of human intervention in implementing criminal law.

The final area of AI's intrusion into the administration of criminal justice addressed here concerns evidence. AI tools help investigation authorities gather and correlate large volumes of data and improve the exploitation of many kinds of digital information. They also produce statistical evaluations that may be valuable for forensic purposes, particularly to identify persons through facial recognition, voice recognition, and probabilistic genotyping. Whether these results are admissible in court, and under what conditions (including technical reliability and fair trial requirements) they may be offered as evidence, remains an open question.

This volume reviews the various uses of AI at the different stages of the criminal process, using a country-comparative approach. It addresses the fundamental questions that this new technology raises when confronted with the guarantees of due process, fair trial, and other relevant human rights. It also presents the 32 resolutions that a team of twenty professors of criminal law, representing various legal traditions and parts of the world, agreed upon to ensure that the use of AI is in line with the essential principles of criminal procedural law and with a fair justice system.

Juliette Lelieur is a Professor of Criminal Law at the University of Strasbourg, France.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages: 394

- Language: English, French

- Series:

Characteristics

- EAN: 9789046612248

- Publication date: 20 December 2023

- Format: Paperback

- Dimensions: 170 mm x 240 mm
