- Free pickup at your Club store

- 7,000,000 titles in our catalog

- Secure payment

- Always a store near you

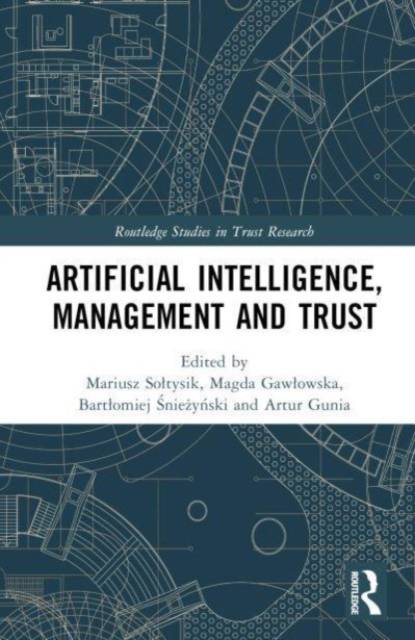

Artificial Intelligence, Management and Trust

Description

The main challenge related to the development of artificial intelligence (AI) is to establish harmonious human-AI relations, necessary for the proper use of its potential. AI will eventually transform many businesses and industries; its pace of development is influenced by the lack of trust on the part of society. Autonomous AI decision-making is still in its infancy, but use cases are evolving at an ever-faster pace. Over time, AI will be responsible for making more decisions, and those decisions will be of greater importance.

The monograph aims to comprehensively describe AI technology in three aspects: organizational, psychological, and technological in the context of the increasingly bold use of this technology in management. Recognizing the differences between trust in people and AI agents and identifying the key psychological factors that determine the development of trust in AI is crucial for the development of modern Industry 4.0 organizations. So far, little is known about trust in human-AI relationships and almost nothing about the psychological mechanisms involved. The monograph will contribute to a better understanding of how trust is built between people and AI agents, what makes AI agents trustworthy, and how their morality is assessed. It will therefore be of interest to researchers, academics, practitioners, and advanced students with an interest in trust research, management of technology and innovation, and organizational management.

Specifications

Contributors

- Publisher:

Content

- Number of pages: 210

- Language: English

- Series:

Characteristics

- EAN: 9781032317939

- Publication date: 01-09-23

- Format: Hardcover

- Digital format: Sewn

- Dimensions: 152 mm x 229 mm

- Weight: 467 g

Reviews

We publish only reviews that meet the required conditions. See our conditions for reviews.