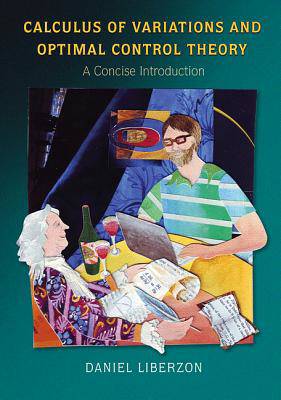

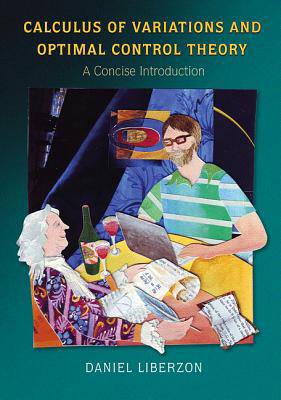

Calculus of Variations and Optimal Control Theory

A Concise Introduction

Daniel Liberzon

Hardcover | English

€153.45

Description

This textbook offers a concise yet rigorous introduction to the calculus of variations and optimal control theory, and is a self-contained resource for graduate students in engineering, applied mathematics, and related subjects. Designed specifically for a one-semester course, the book begins with the calculus of variations, preparing the ground for optimal control. It then gives a complete proof of the maximum principle and covers key topics such as the Hamilton-Jacobi-Bellman theory of dynamic programming and linear-quadratic optimal control.

Calculus of Variations and Optimal Control Theory also traces the historical development of the subject and features numerous exercises, notes and references at the end of each chapter, and suggestions for further study.

- Offers a concise yet rigorous introduction

- Requires limited background in control theory or advanced mathematics

- Provides a complete proof of the maximum principle

- Uses consistent notation in the exposition of classical and modern topics

- Traces the historical development of the subject

- Includes a solutions manual (available only to instructors)

Leading universities that have adopted this book include:

- University of Illinois at Urbana-Champaign ECE 553: Optimum Control Systems

- Georgia Institute of Technology ECE 6553: Optimal Control and Optimization

- University of Pennsylvania ESE 680: Optimal Control Theory

- University of Notre Dame EE 60565: Optimal Control

Specifications

Contributors

- Author(s): Daniel Liberzon

- Publisher:

Content

- Number of pages: 256

- Language: English

Details

- EAN: 9780691151878

- Publication date: 08-01-12

- Format: Hardcover

- Binding: Unsewn / perfect bound

- Dimensions: 178 mm × 257 mm

- Weight: 680 g
