- Free pickup at your Club store

- 7,000,000 titles in our catalog

- Secure payment

- Always a store near you

Description

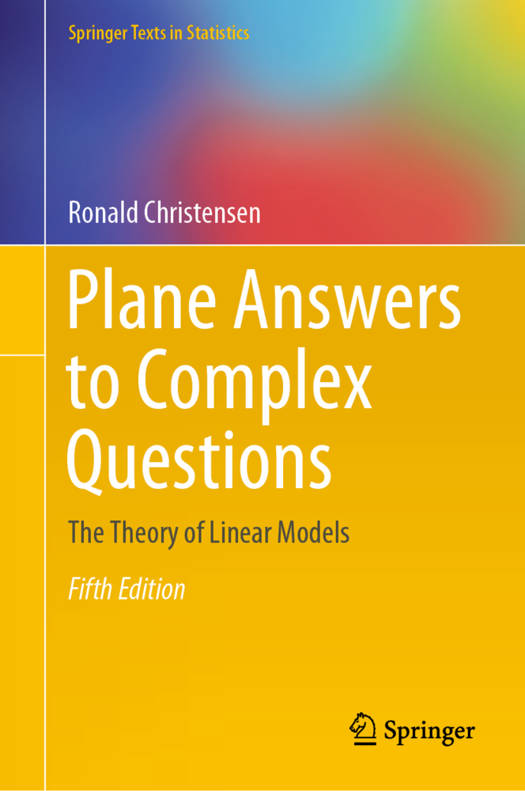

This textbook provides a wide-ranging introduction to the use and theory of linear models for analyzing data. The author's emphasis is on providing a unified treatment of linear models, including analysis of variance models and regression models, based on projections, orthogonality, and other vector space ideas. Every chapter comes with numerous exercises and examples that make it ideal for a graduate-level course. All of the standard topics are covered in depth: estimation, including biased and Bayesian estimation; significance testing; ANOVA; multiple comparisons; regression analysis; and experimental design models. In addition, the book covers topics that are not usually treated at this level but are important in their own right: best linear and best linear unbiased prediction, split plot models, balanced incomplete block designs, testing for lack of fit, testing for independence, models with singular covariance matrices, diagnostics, collinearity, and variable selection. This new edition includes new sections on alternatives to least squares estimation and the variance-bias tradeoff, expanded discussion of variable selection, new material on characterizing the interaction space in an unbalanced two-way ANOVA, Freedman's critique of the sandwich estimator, and much more.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages:

- 529

- Language:

- English

- Series:

Details

- EAN:

- 9783030320966

- Publication date:

- 13 March 2020

- Format:

- Hardcover

- Digital format:

- Sewn

- Dimensions:

- 156 mm x 234 mm

- Weight:

- 943 g
