- Free pickup at your Club store

- 7,000,000 titles in our catalogue

- Secure payment

- Always a store near you

94,95 €

+ 189 points

Format

Description

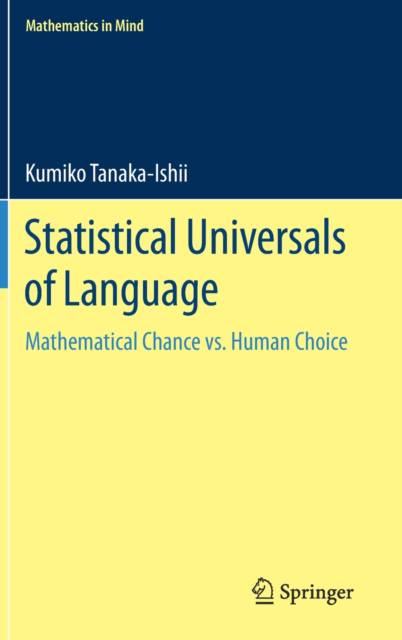

This volume explores the universal mathematical properties underlying big language data and possible reasons why such properties exist, revealing how we may be unconsciously mathematical in our language use. These properties are statistical, and thus differ from the linguistic universals that help describe the variation of human languages; they can only be identified over a large accumulation of usages. The book provides an overview of state-of-the-art findings on these statistical universals, with Zipf's law as a well-known example, and reconsiders the nature of language accordingly.

Beyond that, the book's main focus lies in explaining the property of long memory, which was discovered and studied more recently by borrowing concepts from complex systems theory. The statistical universals may not only be precursors to the formation of the language system; they also highlight the qualities of language that remain weak points in today's machine learning.

In summary, this book provides an overview of language's global properties. It will be of interest to anyone engaged in fields related to language and computing or statistical analysis methods, with an emphasis on researchers and students in computational linguistics and natural language processing. While the book does apply mathematical concepts, all possible effort has been made to speak to a non-mathematical audience as well by communicating mathematical content intuitively, with concise examples taken from real texts.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Number of pages: 236

- Language: English

- Series:

Characteristics

- EAN: 9783030593766

- Publication date: 02-04-21

- Format: Hardcover

- Digital format: Sewn (Genaaid)

- Dimensions: 156 mm x 234 mm

- Weight: 530 g

Reviews

We only publish reviews that meet the required conditions. See our conditions for reviews.